How are you going to obtain GPT-5 degree inference in workloads utilizing real-world long-context instruments with out the quadratic consideration and GPU prices that sometimes make these programs impractical? DeepSeek analysis is introduced DeepSeek-V3.2 and DeepSeek-V3.2-Speciale. These are inference-first fashions constructed for brokers, concentrating on high-quality inference, lengthy context, and agent workflows, with open weights and operational APIs. This mannequin combines DeepSeek Sparse Attendant (DSA), an enhanced GRPO reinforcement studying stack, and agent-native instruments protocols to report efficiency akin to GPT 5. DeepSeek-V3.2-Particular Attain Gemini 3.0 Professional degree inference in public benchmarks and contests.

Sparse consideration with almost linear lengthy context prices

each DeepSeek-V3.2 and DeepSeek-V3.2-Speciale We use the DeepSeek-V3 Combination of Consultants transformer inherited from V3.1 Terminus with roughly 671B whole parameters and 37B lively parameters per token. The one structural change is the DeepSeek Sparse Attend launched by steady pre-training.

DeepSeek sparse consideration divides consideration into: two elements. a lightning indexer Run a small variety of low-precision heads on each token pair and generate a relevance rating. a Advantageous-grained selector We keep the place of the top-k key values for every question, and the primary consideration path performs multi-query consideration and multi-head latent consideration on this sparse set.

This adjustments the dominant complexity from O(L²) to O(kL). Right here, L is the size of the sequence and ok is the variety of chosen tokens, which is way smaller than L. Based mostly on benchmarks, DeepSeek-V3.2 It matches the dense Terminus baseline when it comes to accuracy, reduces inference prices for lengthy contexts by about 50%, and delivers quicker throughput and decrease reminiscence utilization on H800-class {hardware} and vLLM and SGLang backends.

DeepSeek sparse consideration pre-training continues

DeepSeek’s sparse consideration (DSA) is launched by way of steady pre-training. DeepSeek-V3.2 the final cease. Throughout the dense warmup part, dense consideration stays lively, all spine parameters are frozen, and solely the lightning indexer is skilled with Kullback-Leibler loss to match the distribution of dense consideration on the 128K context sequence. This stage makes use of a small variety of steps and about 2 billion tokens, which is sufficient for the indexer to be taught a helpful rating.

Within the sparse part, the selector holds 2048 key-value entries per question, the spine is unfrozen, and the mannequin continues to coach with roughly 944B tokens. Indexer gradients come up solely from alignment losses with cautious consideration to chose areas. With this schedule, DeepSeek’s sparse consideration (DSA) works as a substitute for dense consideration with comparable high quality and decrease lengthy context value.

GRPO with greater than 10% RL compute

Along with sparse structure, DeepSeek-V3.2 makes use of group relative coverage optimization (GRPO) as its main reinforcement studying technique. After the coaching, the analysis group mentioned: reinforcement studying RL compute is over 10% of pre-training compute.

RL is organized round specialised fields. The analysis group will prepare specialised execution in arithmetic, aggressive programming, common logical reasoning, shopping and agent duties, and security, distilling these specialists into a standard 685B parameter base. DeepSeek-V3.2 and DeepSeek-V3.2-Speciale. GRPO is carried out utilizing an unbiased KL estimator, off-policy sequence masking, and a preserving mechanism. mixture of consultants (MoE) Constant routing and sampling masks between coaching and sampling.

Agent information, considering modes, and gear protocols

The DeepSeek analysis group is constructing a big artificial agent dataset by producing over 1,800 environments and over 85,000 duties throughout code brokers, search brokers, common instruments, and code interpreter setups. The duties are constructed to be tough to resolve and simple to confirm, and are used as RL targets together with precise coding and search traces.

When reasoning, DeepSeek-V3.2 Introduce express considering and non-thinking modes. The deepseek-reasoner endpoint exposes a considering mode by default, the place the mannequin generates an inside chain of ideas earlier than the ultimate reply. The Considering with Instruments information explains how inference content material is preserved throughout device invocations and cleared when new person messages arrive, and the way device invocations and gear outcomes stay in context even when inference textual content is trimmed to suit funds.

Chat templates have been up to date based mostly on this conduct. of DeepSeek-V3.2 Particular The repository ships with Python encoder and decoder helpers as an alternative of Jinja templates. Messages can embrace a reasoning_content area together with the content material, managed by the considering parameter. The developer position is reserved for search brokers and isn’t accepted within the common chat move by way of the official API. This protects this channel from unintentional abuse.

Benchmarks, competitions, open artifacts

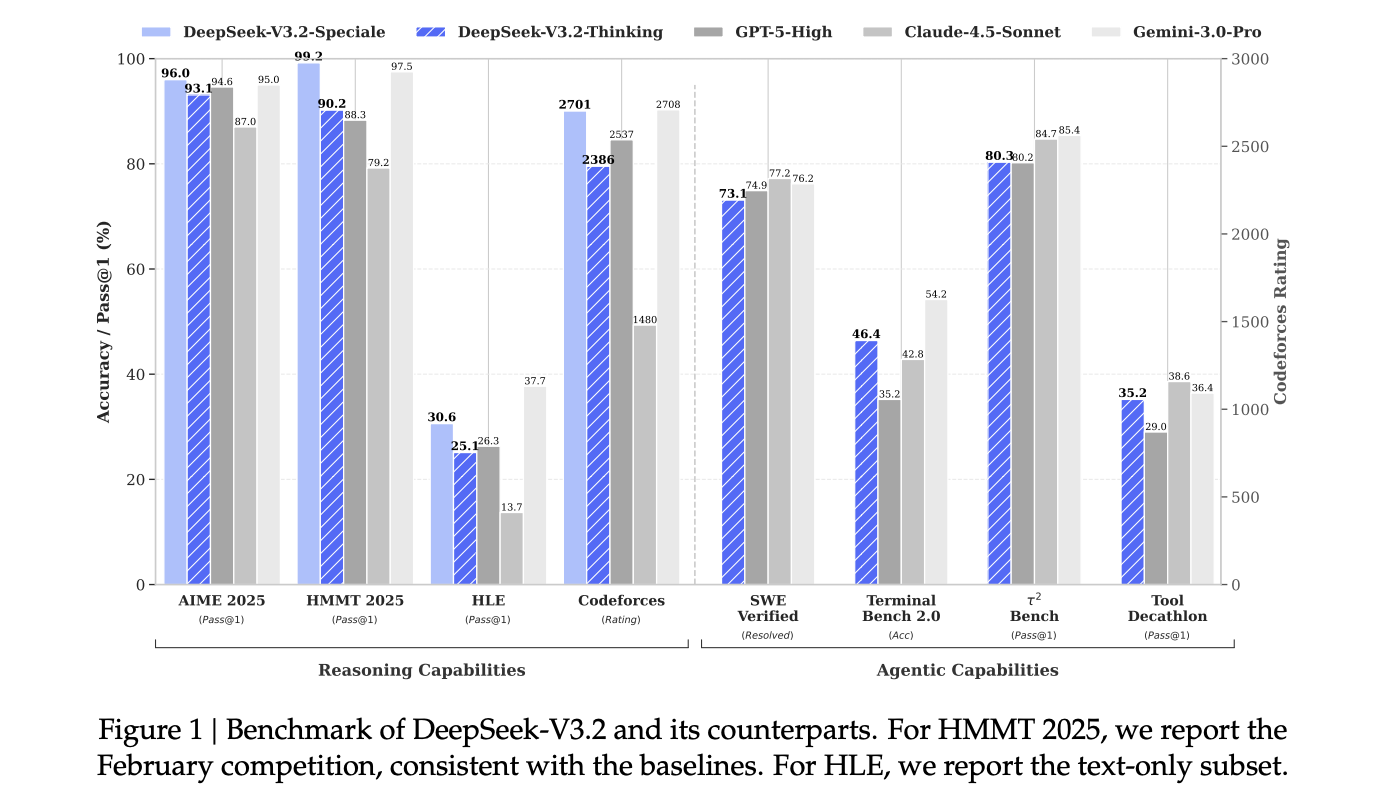

Commonplace reasoning and coding benchmarks DeepSeek-V3.2 and particularly DeepSeek-V3.2 Speciale Suites corresponding to AIME 2025, HMMT 2025, GPQA, and LiveCodeBench are reported to be akin to GPT-5 and near Gemini-3.0 Professional, making them less expensive for long-context workloads.

As for the formal competitors, the DeepSeek analysis group says: DeepSeek-V3.2 Speciale He achieved gold medal-level outcomes on the Worldwide Arithmetic Olympiad 2025, Chinese language Arithmetic Olympiad 2025, and Worldwide Informatics Olympiad 2025, and achieved gold-medal degree outcomes on the ICPC World Finals 2025.

Necessary factors

- DeepSeek-V3.2 provides DeepSeek Sparse Attendee, which has near-linear O(kL) consideration prices whereas sustaining comparable high quality to DeepSeek-V3.1 Terminus, decreasing lengthy context API prices by roughly 50% in comparison with earlier dense DeepSeek fashions.

- The mannequin household maintains a MoE spine of 671B parameters with 37B lively parameters per token and exposes a full 128K context window within the manufacturing API. This makes lengthy documentation, a number of step chains, and huge device traces sensible slightly than a lab-only function.

- Group Relative Coverage Optimization (GRPO) after coaching is used with compute budgets larger than 10% of pre-training, with contest-style specialists targeted on arithmetic, code, common inference, shopping or agent workloads, and security, the place circumstances are launched for exterior validation.

- DeepSeek-V3.2 is the primary mannequin within the DeepSeek household to immediately combine considering into device utilization, supporting each considering and non-thinking device modes and a protocol the place inside reasoning persists throughout device invocations and is just reset on new person messages.

Please test paper and model weights. Please be happy to test it out GitHub page for tutorials, code, and notebooks. Please be happy to comply with us too Twitter Remember to hitch us 100,000+ ML subreddits and subscribe our newsletter. hold on! Are you on telegram? You can now also participate by telegram.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of synthetic intelligence for social good. His newest endeavor is the launch of Marktechpost, a synthetic intelligence media platform. It stands out for its thorough protection of machine studying and deep studying information, which is technically sound and simply understood by a large viewers. The platform boasts over 2 million views monthly, demonstrating its reputation amongst viewers.