Transformers use a mix of consideration and experience to scale computation, however there’s nonetheless no native approach to carry out information search. Recomputing the identical native sample over and over wastes depth and FLOPs. DeepSeek’s new Engram module targets precisely this hole by including a conditional reminiscence axis that operates in parallel to MoE, reasonably than changing it.

At a excessive stage, Engram modernizes the traditional N gram embedding and transforms it right into a scalable O(1) lookup reminiscence that connects on to the Transformer spine. The result’s a parametric reminiscence that shops static patterns resembling frequent phrases and entities, and the spine focuses on harder inferences and long-range interactions.

How Engram suits into DeepSeek Transformer

The proposed strategy makes use of DeepSeek V3 tokenizer with 128k vocabulary and pre-trains with 262B tokens. The spine is a 30 block transformer with hidden measurement 2560. Every block makes use of 32 heads of multihead latent consideration and connects to the feedforward community by a manifold constrained hyperconnection with an enlargement issue of 4. Use the Muon optimizer for optimization.

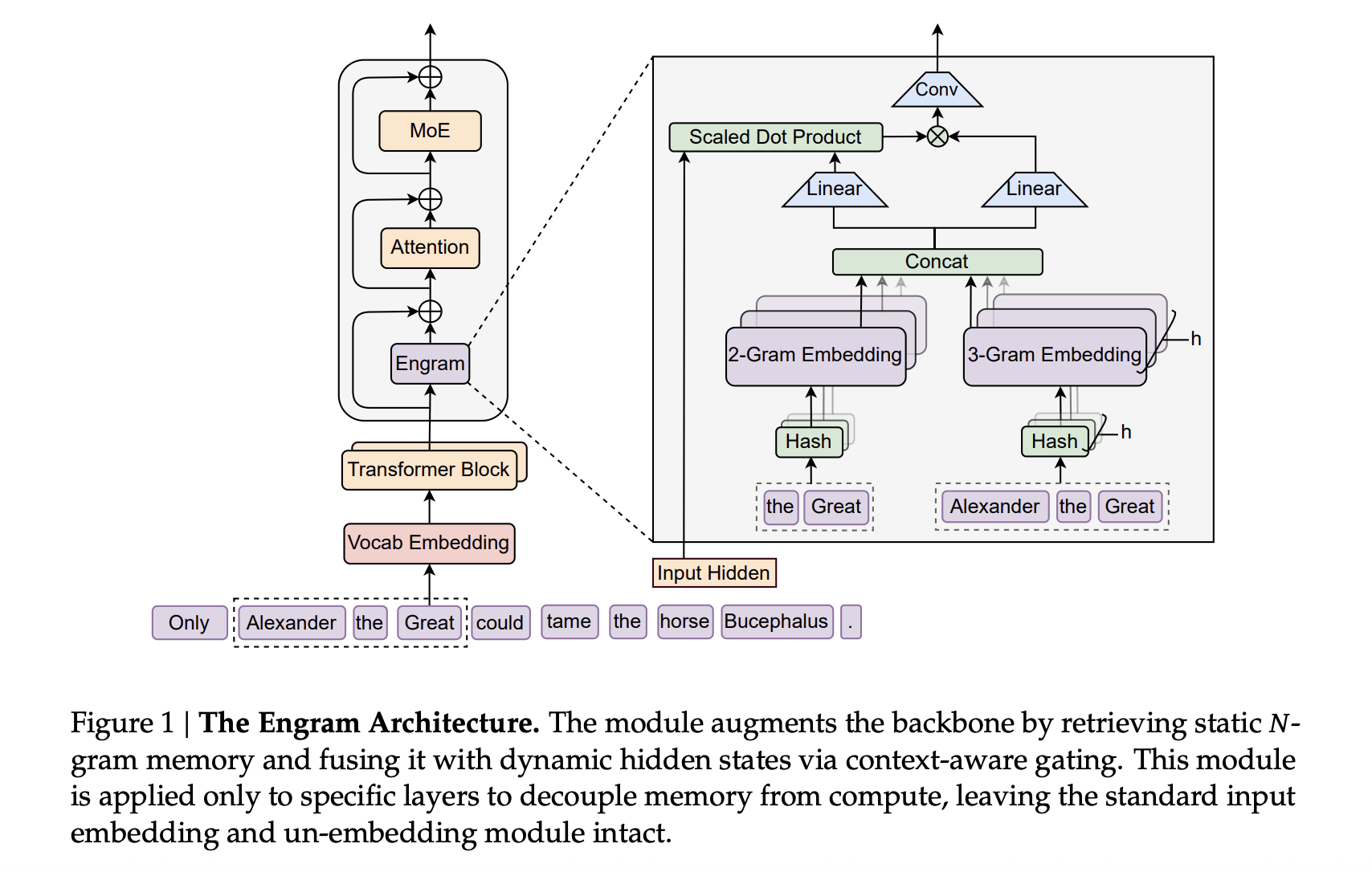

Engram connects to this spine as a sparse embedding module. It’s constructed from a hashed N-gram desk, with multi-head hashing into prime-sized buckets, a small depthwise convolution over the N-gram context, and a context-aware gate scalar starting from 0 to 1 that controls how a lot of the captured embedding is injected into every department.

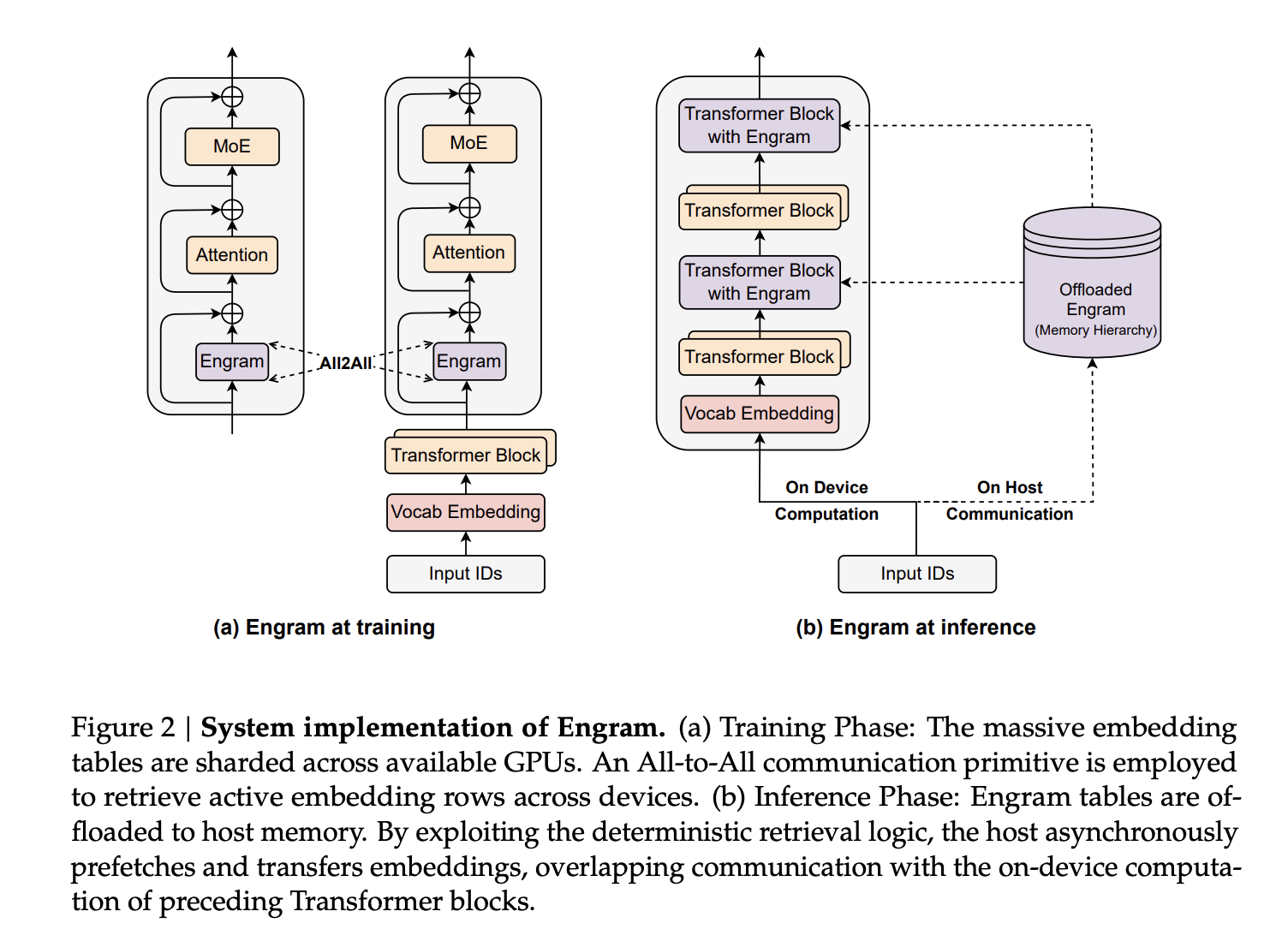

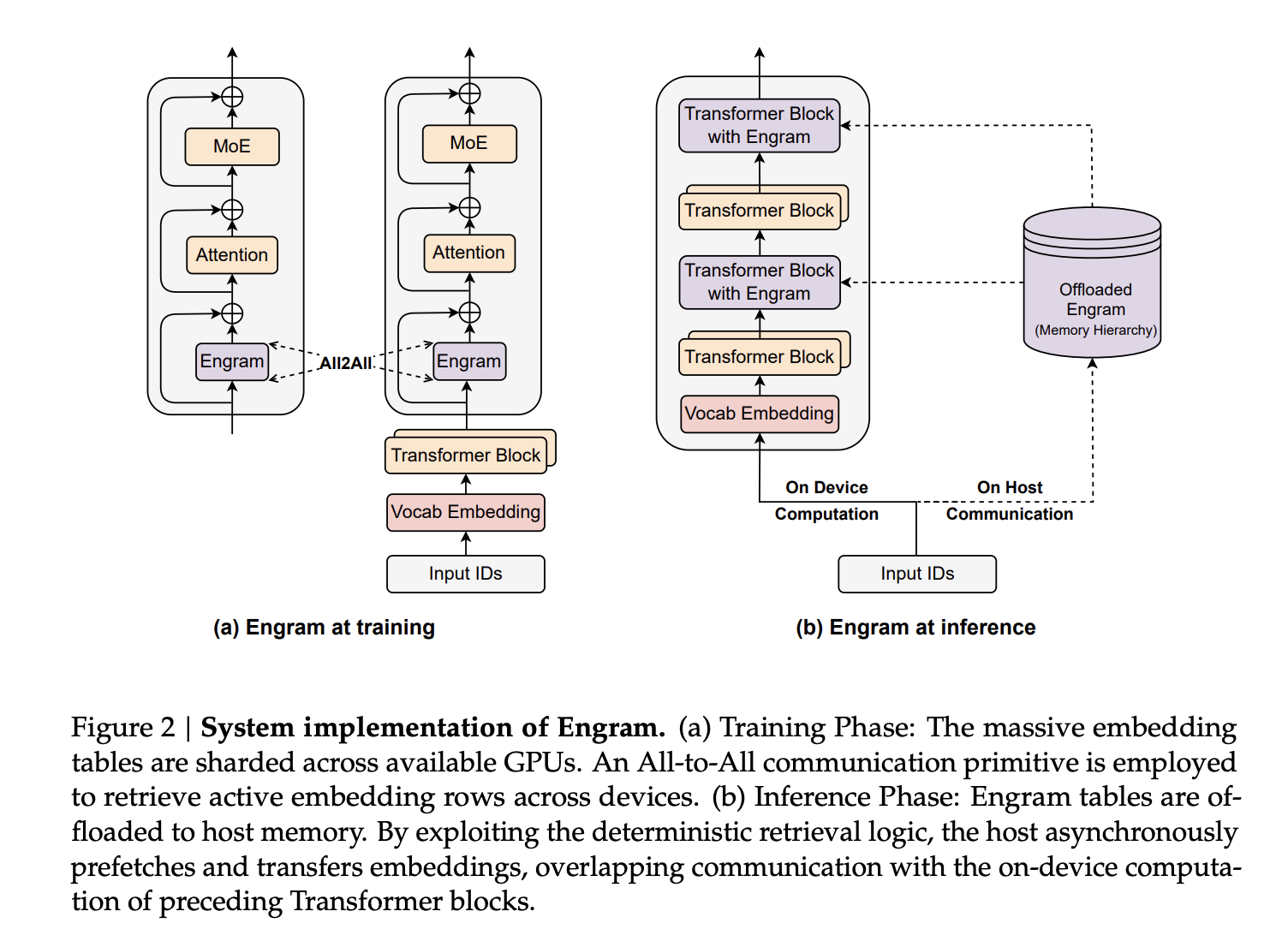

In bigger fashions, Engram-27B and Engram-40B share the identical transformer spine as MoE-27B. MoE-27B makes use of 72 routed consultants and a pair of shared consultants to switch dense feedforward with DeepSeekMoE. Engram-27B reduces the variety of routed consultants from 72 to 55 and reallocates these parameters to five.7B of engram reminiscence whereas preserving the overall parameters at 26.7B. The engram module makes use of N equal to {2,3}, 8 engram heads, dimension 1280, and is inserted at layers 2 and 15. Engram 40B will increase engram reminiscence to 18.5B parameters whereas preserving the enabled parameters mounted.

Sparsity Allocation, second scaling knob subsequent to MoE

A central design query is easy methods to divide the sparse parameter finances between routed consultants and conditional reminiscence. The analysis staff formalized this as a sparsity project drawback, defining the project ratio ρ because the proportion of inactive parameters assigned to consultants within the Ministry of Schooling. The pure MoE mannequin has ρ of 1. Reducing ρ reassigns the parameters from the professional to the engram slots.

For the medium-sized 5.7B and 9.9B fashions, sweeping ρ yields a transparent U-shaped curve of validation loss versus allocation ratio. The engram mannequin matches the pure MoE baseline even when ρ is lowered to about 0.25. This equates to about half of the variety of rooted professionals. Optimum circumstances happen when roughly 20-25 p.c of the sparse finances is given to engrams. This optimum is steady throughout each computational domains, suggesting a good partitioning of conditional computation and conditional reminiscence beneath mounted sparsity.

The analysis staff additionally studied an infinite reminiscence regime on a set 3B MoE spine educated for 100B tokens. These scale the engram desk from roughly 2.58e5 to 1e7 slots. The validation loss follows a virtually good energy legislation in log house. Which means extra conditional reminiscence will proceed to have an impact with none extra computation. Engram outperforms OverEncoding, one other N-gram embedding technique that averages over lexical embeddings, beneath the identical reminiscence finances.

Outcomes of intensive pre-training

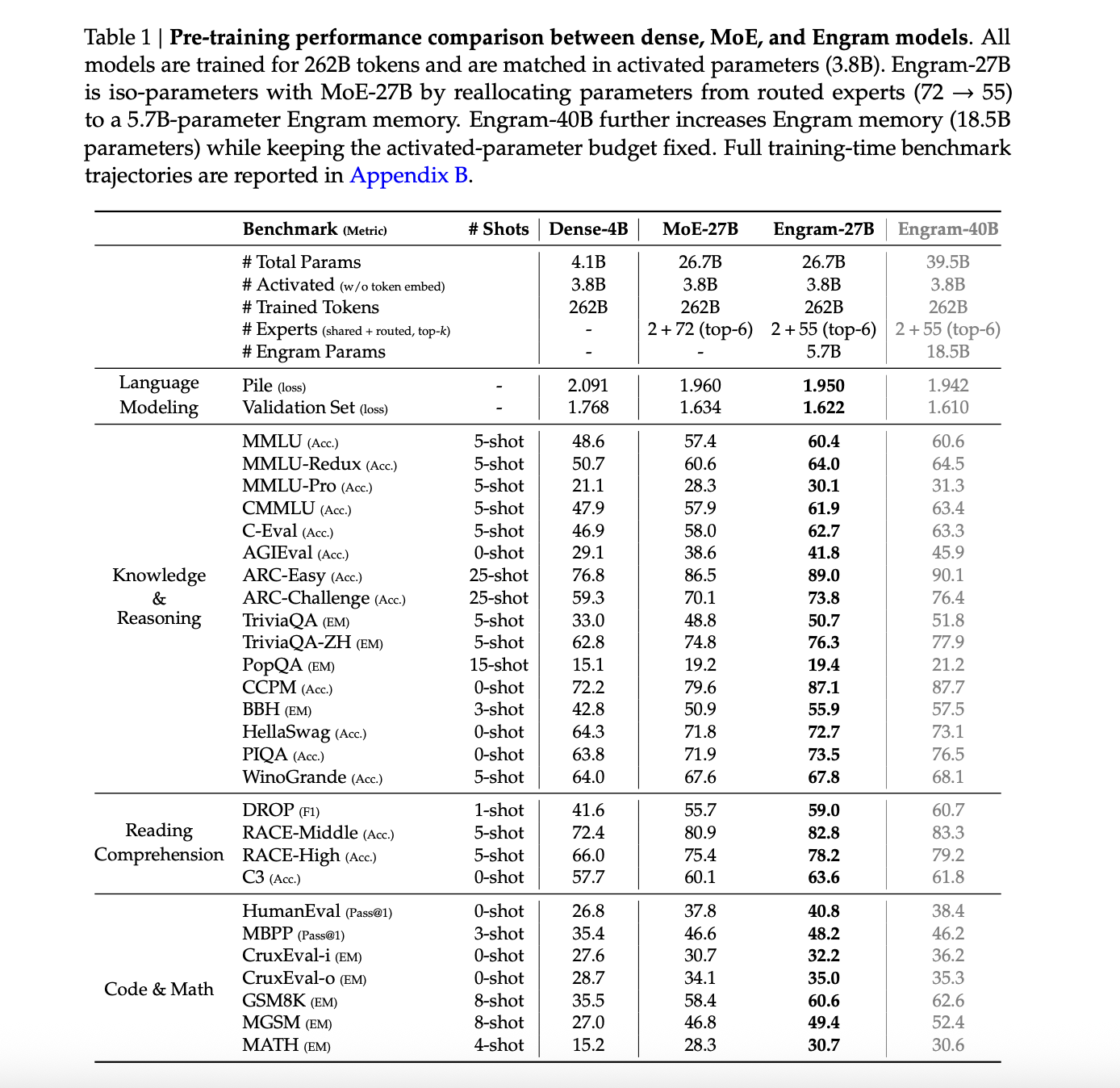

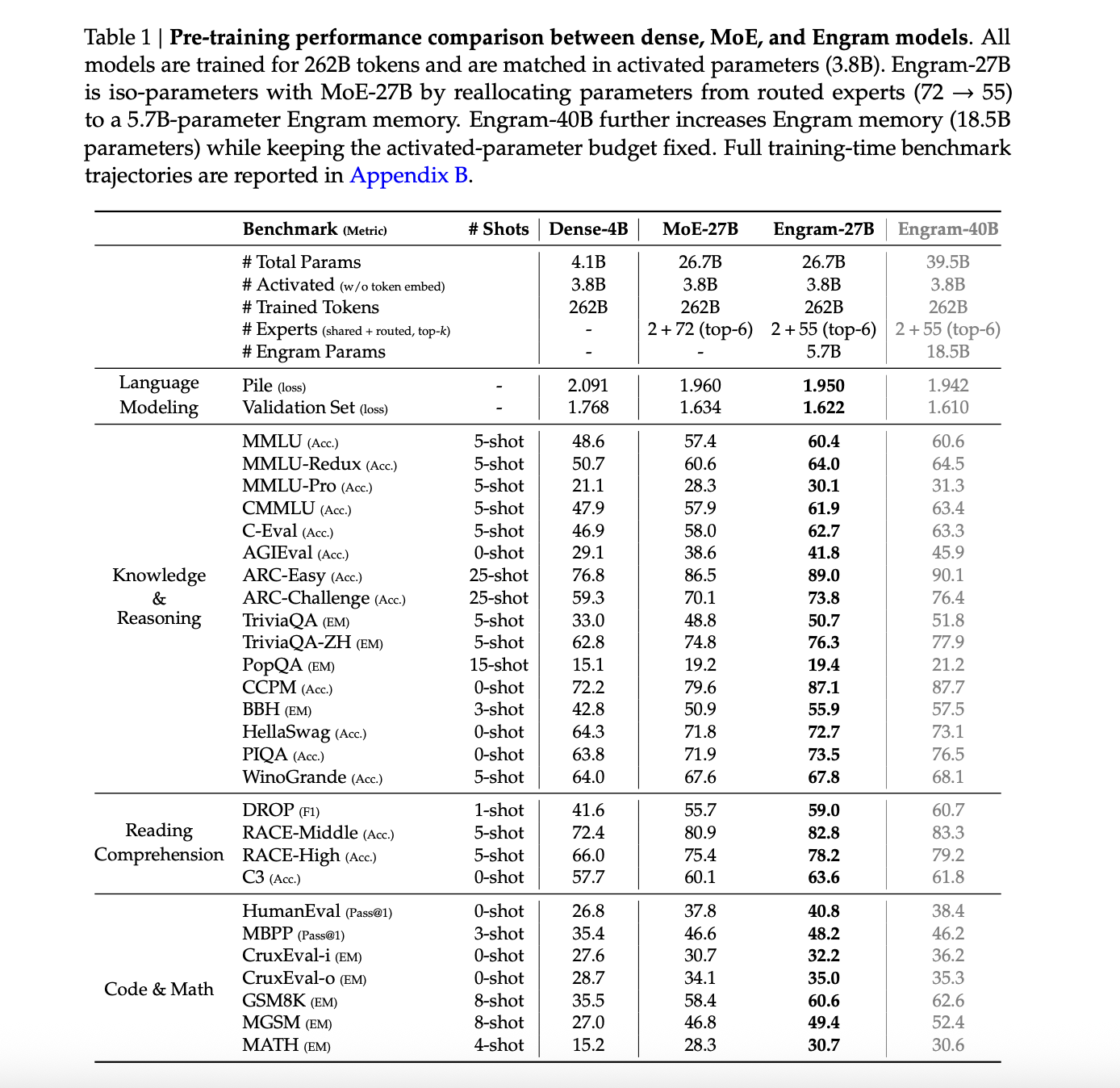

The principle comparability includes 4 fashions educated with the identical 262B token curriculum, with an activation parameter of three.8B in all instances. These are Dense 4B with a complete parameter of 4.1B, MoE 27B and Engram 27B with a complete parameter of 26.7B, and Engram 40B with a complete parameter of 39.5B.

On The Pile check set, the language modeling loss is 2.091 for MoE 27B, 1.960 for Engram 27B, 1.950 for Engram 27B variant, and 1.942 for Engram 40B. No losses have been reported for dense 4B piles. The validation loss for the inner holdout set drops from 1.768 for MoE 27B to 1.634 for Engram 27B, and to 1.622 and 1.610 for the Engram variants.

Throughout information and reasoning benchmarks, Engram-27B constantly improves over MoE-27B. MMLU will increase from 57.4 to 60.4, CMMLU will increase from 57.9 to 61.9, and C-Eval will increase from 58.0 to 62.7. ARC Problem elevated from 70.1 to 73.8, BBH elevated from 50.9 to 55.9, and DROP F1 elevated from 55.7 to 59.0. Code and math duties additionally improved, together with HumanEval from 37.8 to 40.8 and GSM8K from 58.4 to 60.6.

The authors observe that Engram 40B is probably going undertrained on 262B tokens, as coaching losses proceed to deviate from the baseline close to the top of pre-training, however Engram 40B sometimes pushes these numbers additional.

Results of lengthy context conduct and mechanisms

After pre-training, the analysis staff used YaRN to increase the context window to 32768 tokens in 5000 steps utilizing 30B high-quality lengthy context tokens. They examine MoE-27B and Engram-27B at checkpoints similar to 41k, 46k, and 50k pre-training steps.

For LongPPL and RULER in 32k context, Engram-27B matches or outperforms MoE-27B beneath three circumstances. On about 82 p.c of pre-training FLOPs, Engram-27B with 41k steps matches LongPPL and improves RULER accuracy. For instance, multi-query NIAH 99.6 vs. 73.0, QA 44.0 vs. 34.5. Beneath iso loss at 46k and iso FLOP at 50k, Engram 27B improves each complexity and all RULER classes together with VT and QA.

Mechanism evaluation makes use of LogitLens and Centered Kernel Alignment. Engram variants present variations in KL with respect to decrease layers between intermediate logits and last predictions, particularly in early blocks. Which means the illustration can be prepared for prediction sooner. The CKA similarity map reveals that the shallow engram layer finest matches the deeper MoE layer. For instance, layer 5 of Engram-27B matches round layer 12 of the MoE baseline. Taken collectively, this helps the view that Engram successfully will increase mannequin depth by offloading static reconstruction to reminiscence.

For an ablation research of a 12-layer 3B MoE mannequin with an activation parameter of 0.56B, add 1.6B engram reminiscence as a reference configuration, use N equal to {2,3}, and insert engrams in layers 2 and 6. Sweeping by a single engram layer in depth, we discover that early insertion at layer 2 is perfect. Part ablation highlights three key parts: multi-branch integration, context-aware gating, and tokenizer compression.

Sensitivity analyzes present that factual information is extremely depending on engrams, and when engram output is suppressed throughout inference, TriviaQA scores drop to about 29 p.c of the unique scores, whereas studying comprehension duties keep efficiency at about 81 to 93 p.c (e.g., C3 is 93 p.c).

Vital factors

- Engram provides a conditional reminiscence axis to sparse LLMs to permit steadily occurring N-gram patterns and entities to be retrieved with O(1) hash lookups. Transformer spine and MoE consultants, however, concentrate on dynamic inference and long-range dependencies.

- Beneath mounted parameters and a FLOP finances, reallocating roughly 20-25 p.c of the sparse capability from MoE consultants to engram reminiscence reduces validation loss, indicating that conditional reminiscence and conditional computation are complementary reasonably than competing.

- On large-scale pre-training of 262B tokens, Engram-27B and Engram-40B with the identical 3.8B activation parameters outperform the MoE-27B baseline on language modeling, information, inference, code and math benchmarks with out altering the Transformer spine structure.

- Lengthy context extension to 32768 tokens utilizing YaRN reveals that Engram-27B matches or improves on LongPPL and clearly improves RULER scores, particularly multi-query needle and variable monitoring in haystacks, even when educated with decrease or comparable compute in comparison with MoE-27B.

Please examine paper and GitHub repository. Please be at liberty to observe us too Twitter Remember to affix us 100,000+ ML subreddits and subscribe our newsletter. dangle on! Are you on telegram? You can now also participate by telegram.

Take a look at the most recent releases ai2025.devis a 2025-focused analytics platform that transforms mannequin launches, benchmarks, and ecosystem exercise into structured datasets that may be filtered, in contrast, and exported.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of synthetic intelligence for social good. His newest endeavor is the launch of Marktechpost, a synthetic intelligence media platform. It stands out for its thorough protection of machine studying and deep studying information, which is technically sound and simply understood by a large viewers. The platform boasts over 2 million views per 30 days, demonstrating its recognition amongst viewers.